Markov and Chebyshev Inequalities

Markov Inequality

Let \(X\) be a non-negative random variable and let \(a>0\). Then the probability that \(X\) is at least \(a\) is at most the expectation of \(X\) divided by \(a\):

\[ \operatorname{P}(X \geq a) \leq \frac{\operatorname{E}[X]}{a} \]

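Proof. Consider the indicator \(\mathbf{1}_{\{X \geq a\}}\), which equals 1 when \(X \geq a\) and 0 otherwise. Since \(X\) is non-negative, \(a\,\mathbf{1}_{\{X \geq a\}} \leq X\) always holds. Taking expectations on both sides gives

\[ a \operatorname{P}(X \geq a) \leq \operatorname{E}[X] \]

and dividing by \(a > 0\) gives the result.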

Tip

Markov’s inequality is not useful when \( a \leq \operatorname{E}[X] \), because the bound \(\frac{\operatorname{E}[X]}{a}\) is then at least 1.
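As a rough numerical illustration of Markov's inequality, the sketch below compares the empirical tail probability with the bound. The exponential distribution with rate 1, the sample size, and the helper names `markov_bound` and `empirical_tail` are assumptions chosen for this example only.

```python
import random


def markov_bound(mean, a):
    """Markov's upper bound E[X]/a on P(X >= a), capped at 1."""
    return min(1.0, mean / a)


def empirical_tail(samples, a):
    """Fraction of samples that are at least a."""
    return sum(x >= a for x in samples) / len(samples)


random.seed(0)
# Assumed example distribution: exponential with rate 1 (non-negative, mean 1).
samples = [random.expovariate(1.0) for _ in range(100_000)]
sample_mean = sum(samples) / len(samples)

for a in (0.5, 1.0, 2.0, 4.0):
    tail = empirical_tail(samples, a)
    bound = markov_bound(sample_mean, a)
    print(f"a={a}: empirical P(X>=a)={tail:.4f}, Markov bound={bound:.4f}")
```

For thresholds below the mean, the capped bound is simply 1, which is the uninformative case mentioned in the tip.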

Chebyshev’s Inequality

Motivation

Markov’s inequality bounds only the probability that \(X\) is large. It says nothing about values of \(X\) to the left of the expectation (\(\mu\)), so it gives limited information about how the distribution is spread around \(\mu\).

If \(a\) lies to the left of \(\mu\), that is \(0 < a \leq \mu\), then \(\frac{\operatorname{E}[X]}{a} \geq 1 \).

In that case the bound is not useful at all: it only says that the probability of the event is at most a number that is at least 1, which is trivially true.

So we want a bound on the probability that \(X\) takes values larger than the expectation by at least \(a\) (\(X \geq \mu + a\)) or smaller than the expectation by at least \(a\) (\(X \leq \mu - a\)).

Chebyshev’s inequality provides such a two-sided bound and is typically an improvement on Markov’s inequality.

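Chebyshev’s inequality is given as:

For a random variable \(X\) with finite variance \(\sigma^{2} = \operatorname{Var}[X]\) and any \(a > 0\),

\[ \mathbf{P}(|X-\mathbf{E}[X]| \geq a) \leq \frac{\operatorname{Var}[X]}{a^{2}} \]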

The bound behaves as expected. Increasing \(\sigma\) increases the bound on the probability of \(|X-\mathbf{E}[X]| \geq a\), since the distribution spreads out. Increasing \(a\) decreases the bound, since it is less probable to find \(X\) that far from its expectation.

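Proof. Let \(Y = X - \mathbf{E}[X]\). Markov’s inequality requires a non-negative random variable. \(Y\) can be negative, but \( Y^{2}\) is always non-negative, so Markov’s inequality can be applied to \(Y^{2}\) with threshold \(a^{2}\).

We can say that

\[ \mathbf{P}(Y^{2} \geq a^{2}) \leq \frac{\mathbf{E}[Y^{2}]}{a^{2}} = \frac{\operatorname{Var}[X]}{a^{2}} \]

Hence, since \(|Y| \geq a\) holds exactly when \(Y^{2} \geq a^{2}\),

\[ \mathbf{P}(|X-\mathbf{E}[X]| \geq a) \leq \frac{\operatorname{Var}[X]}{a^{2}} \]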
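A quick numerical check of Chebyshev's inequality can make the statement more concrete. The sketch below is only an illustration: the normal distribution with standard deviation 2, the sample size, and the helper names `chebyshev_bound` and `empirical_deviation_prob` are assumptions made for this example.

```python
import random
import statistics


def chebyshev_bound(variance, a):
    """Chebyshev's upper bound Var[X]/a^2 on P(|X - E[X]| >= a), capped at 1."""
    return min(1.0, variance / a**2)


def empirical_deviation_prob(samples, mean, a):
    """Fraction of samples at distance at least a from the mean."""
    return sum(abs(x - mean) >= a for x in samples) / len(samples)


random.seed(1)
# Assumed example distribution: normal with mean 0 and standard deviation 2.
samples = [random.gauss(0.0, 2.0) for _ in range(100_000)]
mu = statistics.fmean(samples)
var = statistics.pvariance(samples, mu)

for a in (1.0, 2.0, 4.0, 6.0):
    prob = empirical_deviation_prob(samples, mu, a)
    bound = chebyshev_bound(var, a)
    print(f"a={a}: empirical P(|X-mu|>=a)={prob:.4f}, Chebyshev bound={bound:.4f}")
```

The bound holds for every threshold, but it is loose for this distribution, which is expected because Chebyshev's inequality uses only the mean and the variance.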

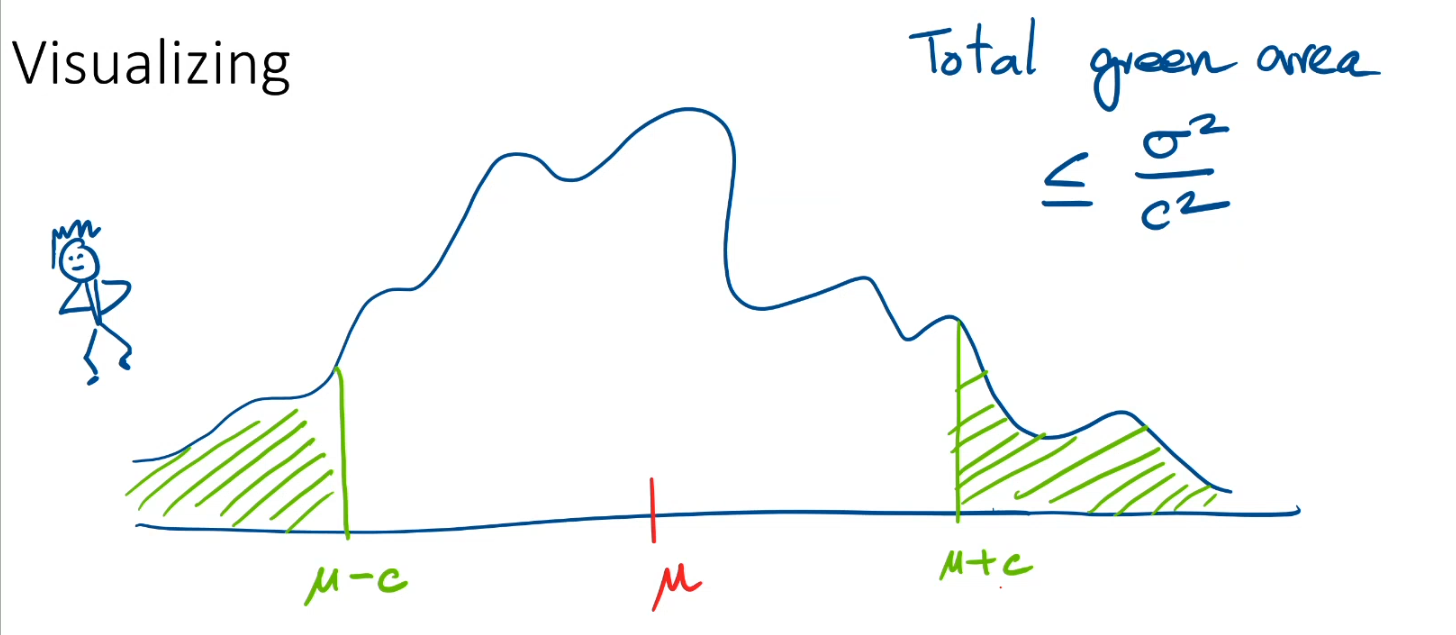

How do we visualize Markov’s Inequality and Chebyshev’s inequality?

Fig. 10 A schematic of the region bounded by Chebyshev’s inequality

The area of the left green-shaded interval is at most \(\frac{\mu}{\mu +c}\).

The area of the total green-shaded interval is at most \(\frac{\operatorname{Var}[X]}{a^{2}}\).

Chebyshev’s inequality is typically stronger than Markov’s, but this is not true in general. Under certain conditions, the reverse holds.
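To see that neither bound always wins, the sketch below evaluates both bounds on the upper tail \(P(X \geq \mu + c)\) for a few values of \(c\). The values \(\mu = 1\) and \(\operatorname{Var}[X] = 1\) and the helper names are assumptions picked for illustration (they match, for example, an exponential distribution with rate 1).

```python
def markov_tail_bound(mu, c):
    """Markov bound on P(X >= mu + c) for a non-negative X with mean mu."""
    return mu / (mu + c)


def chebyshev_tail_bound(var, c):
    """Chebyshev bound on P(|X - mu| >= c), which also bounds P(X >= mu + c)."""
    return var / c**2


# Assumed example values: mean 1 and variance 1.
mu, var = 1.0, 1.0

for c in (0.5, 1.0, 3.0):
    m = markov_tail_bound(mu, c)
    ch = chebyshev_tail_bound(var, c)
    tighter = "Markov" if m < ch else "Chebyshev"
    print(f"c={c}: Markov={m:.4f}, Chebyshev={ch:.4f}, tighter: {tighter}")
```

With these assumed values, Markov's bound is the smaller one when \(c\) is small relative to \(\sigma\), and Chebyshev's bound takes over for larger \(c\) because it decays like \(1/c^{2}\) rather than \(1/c\).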

Moment Generating Functions

In the proof we saw that working with \((X-\mathbf{E}[X])^{2}\) gives a more useful bound than working with \((X-\mathbf{E}[X])\) directly.

What is so special about working with \(X^{2}\) and why not other functions?

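Applying Markov’s inequality to the exponential \(e^{tX}\), whose expectation is the moment generating function of \(X\), often gives stronger bounds than working with \(X^{2}\).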

For a random variable \(X\), let \( Y = \exp(tX) \) for some \(t \in \mathbb{R} \). The moment generating function of \(X\), commonly written \(M_X(t)\), is defined as the expectation of \(Y\):

\[ M_X(t) = E[e^{tX}] \]

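Let us take the second derivative of \(E[e^{tX}]\) with respect to \(t\) at \(t=0\), assuming that differentiation and expectation can be interchanged:

\[ \left.\frac{d^{2}}{dt^{2}} E[e^{tX}]\right|_{t=0} = \left. E[X^{2} e^{tX}]\right|_{t=0} = E[X^{2}] \]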

Similarly, taking the \(k\)-th derivative of the moment generating function at \(t=0\) gives the \(k\)-th moment of \(X\), that is \(E[X^{k}]\).
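The link between derivatives of the moment generating function and moments can be checked numerically. The sketch below is an illustration under assumptions: it uses a fair six-sided die as the random variable and approximates the derivatives at \(t=0\) with central finite differences. The step size `h` and all function names are choices made for this example.

```python
import math

FACES = range(1, 7)  # assumed example: outcomes of a fair six-sided die


def mgf(t):
    """MGF of the die: E[exp(tX)] = (1/6) * sum over faces k of exp(t*k)."""
    return sum(math.exp(t * k) for k in FACES) / 6


def first_derivative_at_zero(f, h=1e-3):
    """Central-difference approximation of f'(0)."""
    return (f(h) - f(-h)) / (2 * h)


def second_derivative_at_zero(f, h=1e-3):
    """Central-difference approximation of f''(0)."""
    return (f(h) - 2 * f(0.0) + f(-h)) / h**2


exact_first_moment = sum(FACES) / 6                   # E[X]
exact_second_moment = sum(k * k for k in FACES) / 6   # E[X^2]

print("first derivative at 0: ", first_derivative_at_zero(mgf))
print("exact E[X]:            ", exact_first_moment)
print("second derivative at 0:", second_derivative_at_zero(mgf))
print("exact E[X^2]:          ", exact_second_moment)
```

The finite-difference values agree with the exact moments only up to a small approximation error, so the comparison is approximate rather than exact.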